ML Pop-Art

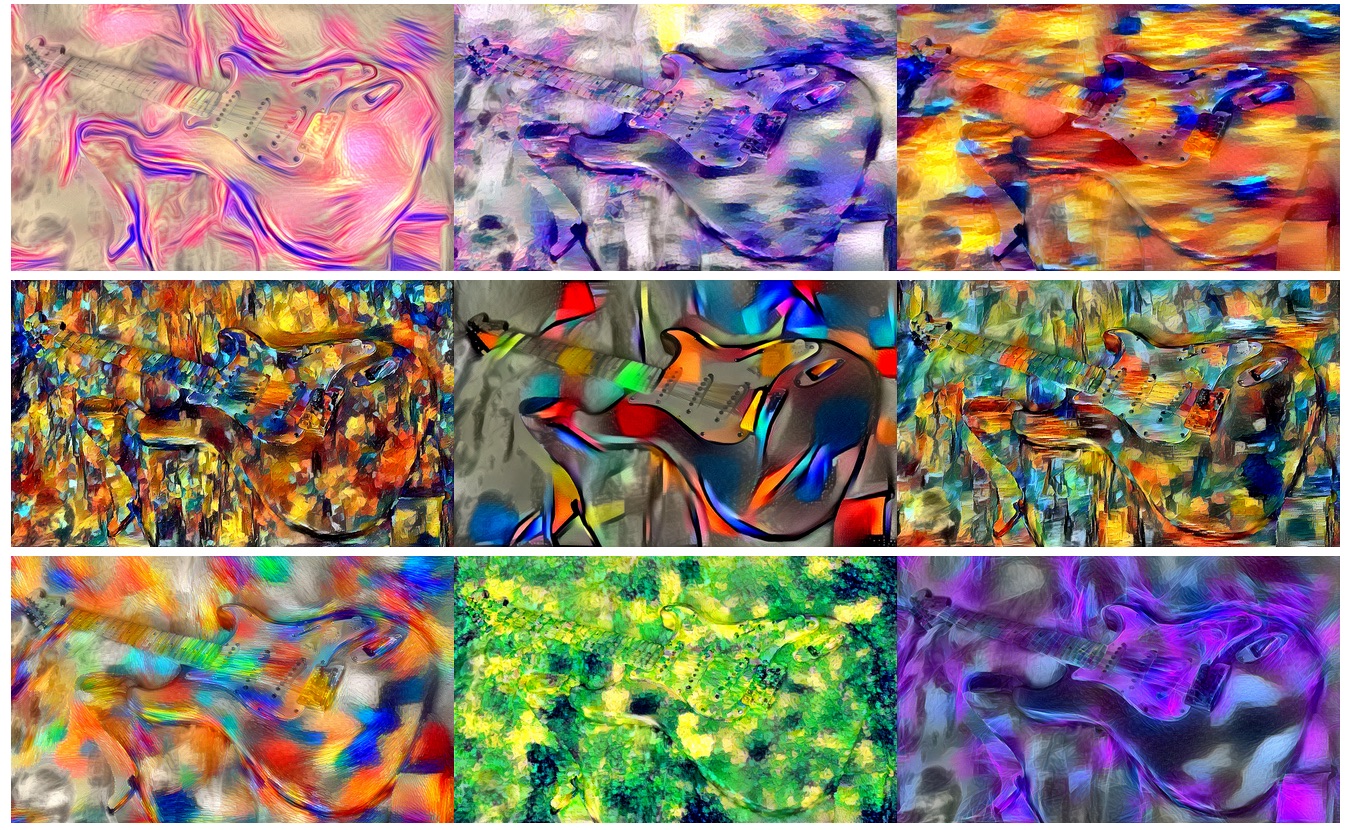

Some time ago I ran across a paper describing a machine-learning technique for transferring the artistic style of a painting onto another image. Mathematica v12 includes a built-in function, ImageRestyle, that does this in a single line of code, so I started using it to explore ideas until I had something I liked: a “Pop-Art” machine-learning application for photos.

Development

The idea was to take a photo and apply different art styles or textures borrowed from other images to it, then take one slice from each restyled result and arrange the slices side by side. As an example, we start with this image:

The following code loads it, adjusts its levels, resizes it, and converts it to grayscale:

(* File: main.nb *)

{imagesSize, styleWeight, goal, overlayWeight} = {1500, .8, "Quality", .25};

(*Load images*)

SetDirectory[NotebookDirectory[]];

input = Import["./in.jpg"];

imgOriginal = ImageResize[input // ImageAdjust, imagesSize] // ColorConvert[#, "Grayscale"] &;

Similarly, we load all the art styles we want to transfer:

(* File: main.nb *)

styleFilenames = FileNames[{"*.jpg", "*.png"}, "./styles"];

stylesRaw = Import[#] & /@ styleFilenames;

styles = ImageResize[#, imagesSize] & /@ stylesRaw;

Then we apply the style transfer once per style and export each result (this takes a really long time):

(* File: main.nb *)

Table[

(*Transfer*)

img = ImageRestyle[imgOriginal

,{styleWeight -> styles[[i]]}

,PerformanceGoal -> goal

];

(*Export*)

Export[("./batch/pop" <> StringPadLeft[ToString[i], 3, "0"] <>".jpg")

,img

,ImageSize -> 2*imagesSize

];

(*Memory clear*)

ClearSystemCache[]; Clear[img];

,{i, 1, Length[styles]}];

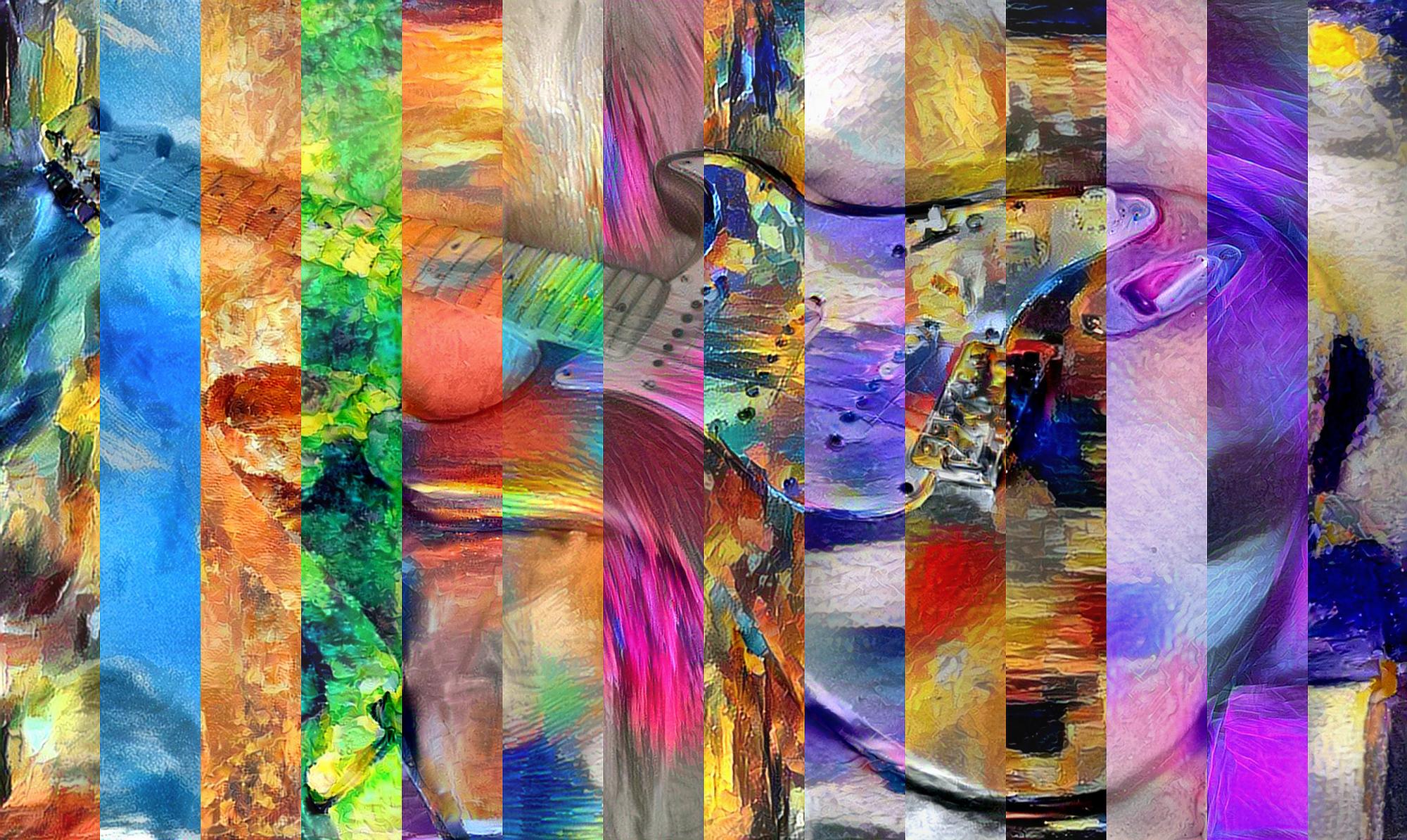

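Finally, we put it back together by shuffling the style-transferred results into random order and taking one vertical slice from each (note that this step reads from ./finals, whereas the transfer step above exported to ./batch):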

(* File: main.nb *)

filenames = FileNames[{"pop*.jpg", "pop*.png"}, "./finals/"];

imgs = RandomSample[ImageResize[Import[#], imagesSize] & /@ filenames];

{width, height} = ImageDimensions[imgOriginal];

{deltaV, deltaH} = {Round[width/Length[imgs]], Round[height/Length[imgs]]}

slices = Table[

probe = imgs[[i + 1]];

(* ImageTake counts rows and columns from 1; the ranges below do not overlap *)

ImageTake[probe, {1, height}, {deltaV*i + 1, deltaV*(i + 1)}]

, {i, 0, (imgs // Length) - 1}];

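(* sharpen is the radius passed to Sharpen; it is not set in the parameter list at the top and must be defined before this line *)

merged = ImageCompose[ImageAssemble[slices], {imgOriginal, overlayWeight}] // Sharpen[#, sharpen] &;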

Export["./art/vertical.jpg", merged // ImageAdjust // Sharpen[#, sharpen] &, ImageSize -> imagesSize]

And we have our assembled image:
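One detail of the slicing step is that `Round[width/Length[imgs]]` multiplied by the number of images does not always equal the image width, and `ImageTake` counts pixels from 1. A minimal sketch of one way to compute contiguous, non-overlapping column ranges follows; `columnRanges` is a hypothetical helper and is not part of the original notebook.

```mathematica
(* Hypothetical helper, not in the original notebook:
   split the columns 1..width into n contiguous, non-overlapping ranges *)
columnRanges[width_Integer, n_Integer] :=
  Module[{edges = Round[Subdivide[0, width, n]]},
   (* each range starts one pixel after the previous boundary *)
   Transpose[{Most[edges] + 1, Rest[edges]}]
  ];

columnRanges[1500, 4]
(* {{1, 375}, {376, 750}, {751, 1125}, {1126, 1500}} *)
```

Each pair could then serve as the column specification of a slice, for example `ImageTake[imgs[[k]], {1, height}, columnRanges[width, Length[imgs]][[k]]]`.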

Further Thoughts

As it stands, the process is extremely inefficient computationally. We don’t really need to restyle the whole image if we are only going to keep one slice of each result. It might also run faster if it were coded in Python with the available packages.
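The first point could be explored with a sketch like the one below, which crops each strip first and restyles only that strip. It is an untested idea rather than the method used above, and it assumes `imgOriginal`, `styles`, `styleWeight` and `goal` are defined as in the earlier code; `edges`, `strips` and `stripsMerged` are names introduced here for illustration. Strips restyled in isolation may look different from slices of a fully restyled image, and seams between strips may be more visible.

```mathematica
(* Sketch only, not the original notebook: restyle one vertical strip per style.
   Assumes imgOriginal, styles, styleWeight and goal are defined as above. *)
{width, height} = ImageDimensions[imgOriginal];
n = Length[styles];
edges = Round[Subdivide[0, width, n]]; (* strip boundaries in pixels *)

strips = Table[
   With[{strip = ImageTake[imgOriginal, {1, height},
       {edges[[k]] + 1, edges[[k + 1]]}]},
    (* resize back in case the restyled output does not match the strip size *)
    ImageResize[
     ImageRestyle[strip, {styleWeight -> styles[[k]]}, PerformanceGoal -> goal],
     ImageDimensions[strip]]],
   {k, n}];

(* a flat list is assembled as a single row *)
stripsMerged = ImageAssemble[strips];
```

To keep the random ordering of the original, `styles` could be replaced by `RandomSample[styles]` before the loop; the overlay and sharpening steps are left out here.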

Documentation and Code

- Repository: GitHub repo

- Dependencies: Mathematica v12